Indexing is the most essential part of SEO. Google must index your site before your site can get any organic traffic from Google. If your site isn’t indexed, you are lost. No one will find your content organically, because it’s not part of Google’s search index.

The first step to fixing an indexing issue is diagnosing the indexing issue. This list will help you do just that.

I’ve roughly organized this list from most common to least common. You can work through the list from top to bottom, and you’ll find your cause and cure.

1. Your Site is Indexed Under a www- or Non-www Domain

Technically, www is a subdomain. Thus, http://example.com is not the same as http://www.example.com. Add both versions to your Google Webmaster Tools (GWT) account so that both are indexed. Be sure to set your preferred domain, but verify ownership of both.

2. Google Hasn’t Found Your Site Yet

This is usually a problem with new sites. Give it at least a few days, but if Google still hasn’t indexed your site, make sure your sitemap is uploaded and working properly. If you haven’t created or submitted a sitemap, this could be your problem. You should also ask Google to fetch and crawl your site. Here are Google’s instructions on how to do that:

- On the Webmaster Tools Home page, click the site you want.

- On the Dashboard, under Crawl, click Fetch as Google.

- In the text box, type the path to the page you want to check.

- In the dropdown list, select Desktop. (You can select another type of page, but currently we only accept submissions for our Web Search index.)

- Click Fetch. Google will fetch the URL you requested. It may take up to 10 or 15 minutes for Fetch status to be updated.

- Once you see a Fetch status of “Successful”, click Submit to Index, and then click one of the following:

  - To submit the individual URL to Google’s index, select URL and click Submit. You can submit up to 500 URLs a week in this way.

  - To submit the URL and all pages linked from it, click URL and all linked pages. You can submit up to 10 of these requests a month.

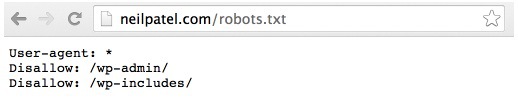

3. The Site or Page(s) are Blocked With robots.txt

Another common problem is that your developer or editor has blocked the site using robots.txt. This is an easy fix. Just remove the blocking entry from robots.txt, and your site will reappear in the index once Google crawls it again. Read more about robots.txt here.
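To make the check concrete, here is a minimal sketch in Python that tests whether a given robots.txt would block a URL. It uses the standard library's `urllib.robotparser`, which follows the basic robots.txt rules but is not guaranteed to match Googlebot's behavior in every detail (wildcard patterns, for example). The function name `is_blocked` and the sample rules are illustrative assumptions, not something from Google's tools.

```python
from urllib.robotparser import RobotFileParser


def is_blocked(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the robots.txt text disallows `url` for `user_agent`.

    Hypothetical helper for illustration; it only approximates how a
    real crawler interprets the file.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, url)


# A robots.txt that blocks every crawler from the whole site.
BLOCK_ALL = """\
User-agent: *
Disallow: /
"""

# A robots.txt that blocks only one directory.
BLOCK_PRIVATE = """\
User-agent: *
Disallow: /private/
"""

print(is_blocked(BLOCK_ALL, "http://www.example.com/"))          # True
print(is_blocked(BLOCK_PRIVATE, "http://www.example.com/"))      # False
print(is_blocked(BLOCK_PRIVATE, "http://www.example.com/private/page.html"))  # True
```

If the first kind of entry (`Disallow: /`) is sitting in your live robots.txt, that alone can explain why nothing is indexed.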

4. You Don’t Have a sitemap.xml

Every website should have a sitemap.xml, which is a simple list of the URLs on your site that you want Google to crawl and index. You can read about Google’s Sitemap policy, and create one pretty easily. If you are experiencing indexation issues on any portion of your site, I recommend that you revise and resubmit your sitemap.xml just to make sure.
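For a small site, a sitemap.xml can be generated with a few lines of code. The sketch below builds a bare-bones sitemap using the namespace from the sitemaps.org protocol and only the required `<loc>` element. The function name `build_sitemap` and the example URLs are assumptions for illustration. A real sitemap may also carry optional fields such as `<lastmod>`.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal sitemap.xml document (as a string) listing `urls`."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as "&" in the URL for us.
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical page list for illustration.
pages = [
    "http://www.example.com/",
    "http://www.example.com/about",
    "http://www.example.com/search?q=seo&page=2",
]
print(build_sitemap(pages))
```

Save the output as sitemap.xml at the root of your site, then submit it through Webmaster Tools.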

5. You Have Crawl Errors

In some cases, Google will not index some pages on your site because it can’t crawl them. Even though it can’t crawl them, it can still see them. To identify these crawl errors, go to Google Webmaster Tools → Select your site → Click on “Crawl” → Click on “Crawl Errors”. If you have any errors, i.e., unindexed pages, you will see them in the list of “Top 1,000 pages with errors.”

6. You Have Lots of Duplicate Content

Too much duplicate content on a site can confuse search engines and make them give up on indexing your site. If multiple URLs on your site are returning the exact same content, then you have a duplicate content issue. To correct this problem, pick the page you want to keep and 301-redirect the rest to it. It sometimes makes sense to canonicalize pages, but be careful. Some sites have reported that a confused canonicalization issue has prevented indexation.
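One rough way to spot exact duplicates is to hash the content each URL returns and group the URLs that share a hash. The sketch below assumes you have already fetched the pages into a dictionary of URL to body text. The name `find_duplicates` and the sample data are hypothetical. It only catches byte-for-byte identical content, not near-duplicates.

```python
import hashlib
from collections import defaultdict


def find_duplicates(pages):
    """Group URLs whose body text is exactly identical.

    `pages` maps URL -> page body (str). Returns a list of URL groups,
    each containing two or more URLs that serve the same content.
    """
    by_digest = defaultdict(list)
    for url, body in pages.items():
        digest = hashlib.sha256(body.encode("utf-8")).hexdigest()
        by_digest[digest].append(url)
    return [sorted(urls) for urls in by_digest.values() if len(urls) > 1]


# Hypothetical crawl results for illustration.
crawled = {
    "http://www.example.com/shoes": "<h1>Shoes</h1>",
    "http://www.example.com/shoes?ref=home": "<h1>Shoes</h1>",
    "http://www.example.com/hats": "<h1>Hats</h1>",
}
for group in find_duplicates(crawled):
    print("Duplicate group:", group)
```

For each group, keep one URL and redirect the others to it.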

7. You’ve Turned On Your Privacy Settings

If you have a WordPress site, you may have accidentally left the privacy settings on. Go to Admin → Settings → Privacy to check.

8. The Site is Blocked by .htaccess

Your .htaccess file is a configuration file for the Apache web server that controls how your site is served on the web. Although .htaccess is handy and useful, it can also be used to block crawlers and prevent indexation.

9. The Site Has NOINDEX in the Meta Tag

Another way of saying “no” to the robots, and thus not having any indexation, is to have noindex meta tags. It often looks like this:

<META NAME="ROBOTS" CONTENT="NOINDEX, NOFOLLOW">

This is one of those issues where you’re like, “Oh, shoot, I can’t believe I didn’t see that!” Here’s what Barry Schwartz wrote about it in SEO Roundtable:

Heck, I see it all the time in the forums. I’ve been called by large fortune 500 companies with SEO issues. I’ve seen more than once, they have a noindex tag on their home page causing the issue. Sometimes they are hard to spot due to redirects, so use a http header checker tool to verify before the redirects. But don’t overlook the obvious, check that first.

Remove this line of code, and you’ll be back in the index in no time.
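If you would rather check for the tag programmatically than read the page source by eye, here is a small sketch using Python's built-in `html.parser`. The class and function names are made up for this example. It looks at `robots` and `googlebot` meta tags only, so it will not catch a noindex sent through an HTTP header, and it expects you to pass in the HTML you have already downloaded.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> style tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # HTMLParser lowercases tag and attribute names, but not values.
        attr_map = {name: (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() not in ("robots", "googlebot"):
            return
        for directive in attr_map.get("content", "").split(","):
            directive = directive.strip().lower()
            if directive:
                self.directives.add(directive)


def has_noindex(html: str) -> bool:
    """Return True if the HTML carries a noindex (or none) robots meta tag."""
    parser = RobotsMetaParser()
    parser.feed(html)
    # "none" is shorthand for "noindex, nofollow".
    return bool(parser.directives & {"noindex", "none"})


page = '<html><head><META NAME="ROBOTS" CONTENT="NOINDEX, NOFOLLOW"></head></html>'
print(has_noindex(page))                                   # True
print(has_noindex("<html><head></head></html>"))           # False
```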

10. You Have AJAX/JavaScript Issues

Google does index JavaScript and AJAX content. But this content is not as easily indexable as plain HTML. So, if your AJAX pages and JavaScript execution are configured incorrectly, Google may not index the page.

11. Your Site Takes Forever to Load

Google doesn’t like it if your site takes an eternity to load. If the crawler encounters interminable load times, it will likely not index the site at all.

12. You Have Hosting Down Times

If the crawlers can’t access your site, they won’t index it. This is obvious enough, but why does it happen? Check your connectivity. If your host has frequent outages, it could be that the site isn’t getting crawled. Time to go shopping for a new host.

13. You Got Deindexed

This one is really bad. If you got hit with a manual penalty and removed from the index, you probably already know about it. If you have a site with a shady history (that you don’t know about), it could be that a lurking manual penalty is preventing indexation.

If your site has dropped from the index, you’re going to have to work very hard to get it back in.

This article is not an attempt to discuss all the reasons for a manual penalty. I refer you to Eric Siu’s post on the topic. Then, I advise you to do everything within your power to recover from the penalty. Finally, I recommend that you play a defensive game to prevent any further penalty, algorithmic or manual.

Conclusion

Indexation is the keystone of good SEO. If your site or certain pages of your site aren’t indexing, you need to figure out why.

Courtesy of http://www.searchenginejournal.com
